The Rapid Evolution of AMRs

Autonomous mobile robots (AMRs) have changed automation. They now use advanced AI instead of simple rules. Earlier methods, such as path-following and potential fields, were basic. They worked in static environments but failed in real-world conditions.

AMRs use SLAM, machine learning, and sensor fusion. This allows them to navigate complex spaces. They also interact with people and machinery.

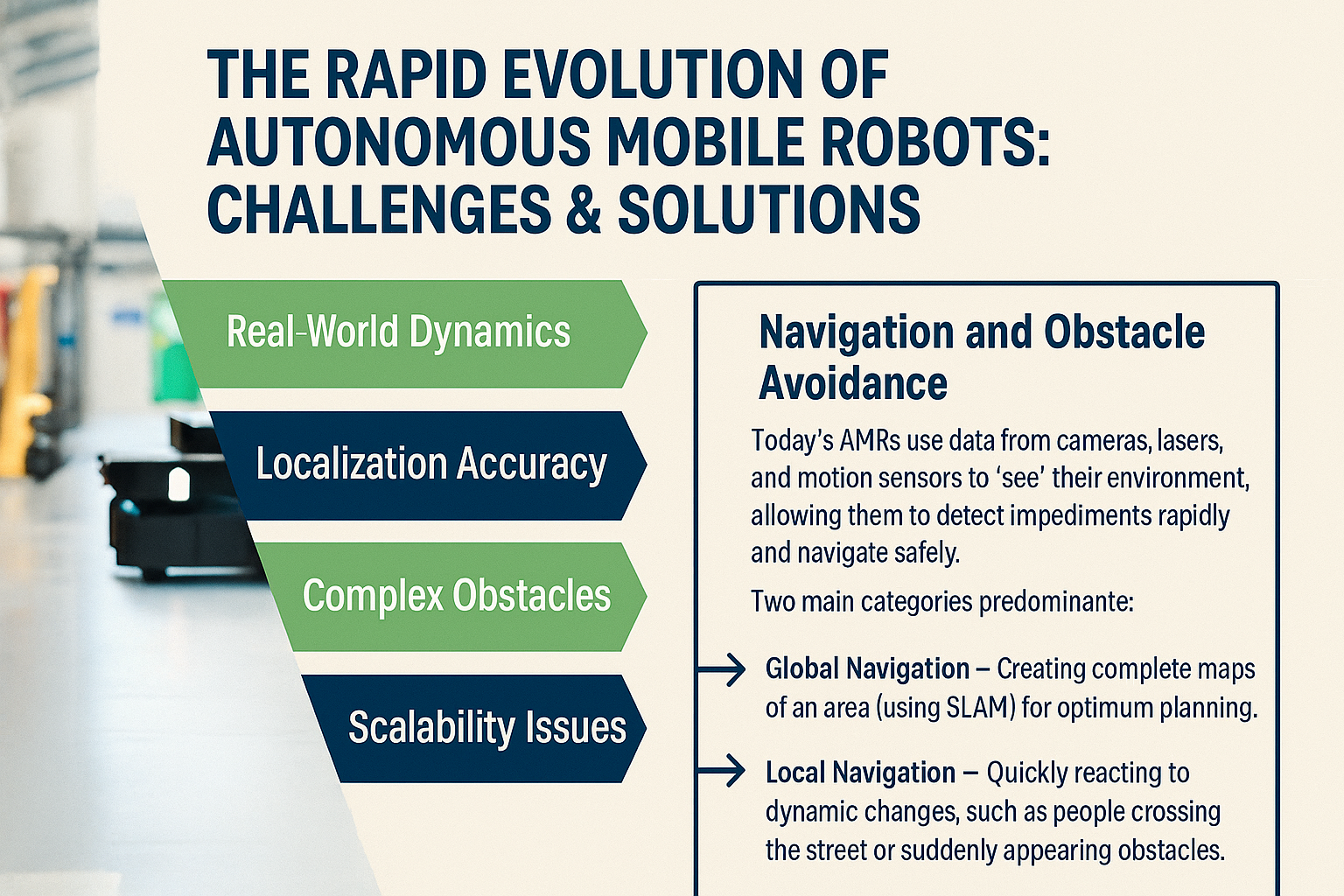

Real-World Challenges of AMR Navigation

Dynamic Environments with Human and Machine Activity

Real-world environments such as factories, warehouses, and hospitals differ from controlled labs. Workers, forklifts, and other robots are constantly moving, and their movements are often unpredictable.

Robots have to figure out what’s moving and what’s not, and then respond appropriately right away.

Say an AMR in a warehouse is planning a route and a forklift suddenly blocks the way. The robot has to recalculate its route quickly without getting in the forklift’s way.

That takes navigation that is both fast and safe.

Localization Accuracy in Cluttered or GPS-Denied Spaces:

AMRs mostly run indoors, where GPS signals are often unavailable. They use SLAM, LiDAR, and vision instead.

Sensors become less accurate in cluttered spaces, like busy warehouses or hospitals.

The robot might lose track of its position because the walls and doors all look alike.

Combining sensor data and edge computing is key for steady positioning.

Complex Obstacles with Irregular Shape, Speed, and Predictability:

A lot of older obstacle models just use simple shapes, such as boxes or cylinders. But in the real world, obstacles are irregular, move quickly, and are hard to foresee.

AMRs need to be able to deal with obstacles that don’t fit into clean mathematical models.

For instance, a human worker carrying an uneven load moves far less predictably than a static wall or box. Robots must forecast where such an obstacle is heading rather than just detect its presence.

This calls for deep reinforcement learning (DRL) and trajectory prediction algorithms that anticipate motion rather than merely react to it.
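To make "anticipating motion" concrete, here is a minimal sketch of the simplest possible trajectory predictor: a constant-velocity forecast. It is not a learned model, and the function names, the 1 m safety radius, and the 5-second horizon are all assumptions chosen for illustration.

```python
import math

def predict_positions(pos, vel, horizon_s, dt):
    """Constant-velocity forecast: a list of (t, x, y) samples over the horizon."""
    steps = int(round(horizon_s / dt))
    return [(k * dt, pos[0] + vel[0] * k * dt, pos[1] + vel[1] * k * dt)
            for k in range(steps + 1)]

def first_conflict(robot, robot_vel, obstacle, obstacle_vel,
                   safety_radius=1.0, horizon_s=5.0, dt=0.1):
    """Return the first forecast time at which the two come within safety_radius, or None."""
    robot_track = predict_positions(robot, robot_vel, horizon_s, dt)
    obstacle_track = predict_positions(obstacle, obstacle_vel, horizon_s, dt)
    for (t, rx, ry), (_, ox, oy) in zip(robot_track, obstacle_track):
        if math.hypot(rx - ox, ry - oy) < safety_radius:
            return t
    return None

# Robot drives along +x at 1 m/s; a worker walks along +y at 1 m/s toward the same spot.
t_hit = first_conflict((0.0, 0.0), (1.0, 0.0), (4.0, -4.0), (0.0, 1.0))
# A worker walking away never comes close within the horizon.
t_clear = first_conflict((0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (1.0, 1.0))
print(t_hit, t_clear)
```

A robot that only detects presence would react when the worker is already in its path; with a forecast like this it can slow down or reroute a few seconds earlier. Real systems would likely replace the constant-velocity assumption with a learned or probabilistic motion model.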

Scalability Issues in Multi-Robot Coordination:

Deploying a single AMR is feasible; coordinating fleets of 50–100 robots is much harder. The challenge is to prevent congestion, collisions, and inefficiencies when many robots share the same space.

Say, in an Amazon-style warehouse, hundreds of AMRs must coordinate tasks and routes without creating traffic jams.

Decentralized fleet management algorithms and cloud/edge integration are required for real-time coordination.
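One common building block for this kind of coordination is a space-time reservation table, where each robot claims the cells it will occupy at each time step. The sketch below is a deliberately simplified, single-table version (not a decentralized algorithm); `ReservationTable`, `schedule`, and the grid paths are hypothetical names and data for illustration.

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

class ReservationTable:
    """Shared table of (cell, time step) slots, each claimed by at most one robot."""

    def __init__(self) -> None:
        self._slots: Dict[Tuple[Cell, int], str] = {}

    def reserve(self, path: List[Cell], robot_id: str) -> bool:
        """Claim path[i] at time step i; all-or-nothing, so a failed attempt claims no slots."""
        slots = [(cell, t) for t, cell in enumerate(path)]
        if any(self._slots.get(slot, robot_id) != robot_id for slot in slots):
            return False
        for slot in slots:
            self._slots[slot] = robot_id
        return True

def schedule(table: ReservationTable, path: List[Cell], robot_id: str,
             max_delay: int = 10) -> Optional[int]:
    """Find the smallest start delay whose timed path is conflict-free."""
    for delay in range(max_delay + 1):
        waiting = [path[0]] * delay  # the robot holds its start cell while it waits
        if table.reserve(waiting + path, robot_id):
            return delay
    return None

table = ReservationTable()
# Two robots whose routes cross at cell (1, 0).
delay_r1 = schedule(table, [(0, 0), (1, 0), (2, 0)], "r1")
delay_r2 = schedule(table, [(1, 1), (1, 0), (1, -1)], "r2")
print(delay_r1, delay_r2)
```

Here the second robot waits one step so the two never occupy the crossing cell at the same time. The sketch ignores head-on swaps between adjacent cells and what happens after a robot reaches its goal, both of which a production fleet manager would need to handle.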

Sensor Heterogeneity and Benchmarking Problems:

AMRs employ various sensor configurations—some prioritize LiDAR, others vision, or a combination. Because of this variety, comparing system performance becomes challenging.

The challenge is the lack of uniform standards for testing navigation algorithms in a variety of situations and sensor types.

For example, an algorithm evaluated with LiDAR in a large area may not work as well with cameras in a tight hospital hallway.

This creates demand for standardized assessment criteria and adaptive algorithms that can generalize across hardware configurations.

Despite significant development, AMRs continue to confront challenges like human interaction, localization, obstructions, scalability, and sensor variety, which are being addressed through AI, SLAM, and enhanced data processing for smarter, safer navigation.

Navigation and Obstacle Avoidance

Today’s AMRs use data from cameras, lasers, and motion sensors to ‘see’ their environment, allowing them to detect impediments rapidly and navigate safely.

Two main categories predominate:

Global navigation: Creating complete maps of an area (using SLAM) for optimum planning.

Local navigation: Quickly reacting to dynamic changes, such as people crossing the robot’s path or obstacles that suddenly appear.

AMRs achieve a balance of long-term efficiency and short-term safety by combining these techniques.
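The interplay between the two layers can be sketched on a small grid: a global planner (A* here, chosen for illustration) computes a full route, and a local layer replans when an obstacle appears on that route. The grid encoding (`'#'` for blocked cells), the `appearing` dictionary, and the function names are assumptions, not part of any particular AMR stack.

```python
import heapq

def astar(grid, start, goal):
    """Global planner: shortest 4-connected path on a grid where '#' marks blocked cells."""
    rows, cols = len(grid), len(grid[0])
    h = lambda c: abs(c[0] - goal[0]) + abs(c[1] - goal[1])  # Manhattan heuristic
    frontier = [(h(start), 0, start)]
    came_from, cost = {start: None}, {start: 0}
    while frontier:
        _, g, cur = heapq.heappop(frontier)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if g > cost[cur]:
            continue  # stale queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cur[0] + dr, cur[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == '#':
                continue
            if g + 1 < cost.get(nxt, float('inf')):
                cost[nxt] = g + 1
                came_from[nxt] = cur
                heapq.heappush(frontier, (g + 1 + h(nxt), g + 1, nxt))
    return None

def navigate(grid, start, goal, appearing):
    """Follow the global path; `appearing` maps a step index to a cell that becomes blocked."""
    grid = [list(row) for row in grid]  # private, mutable copy of the map
    pos, step, travelled = start, 0, [start]
    path = astar(grid, pos, goal)
    while path is not None and pos != goal:
        if step in appearing:
            r, c = appearing[step]
            grid[r][c] = '#'
            if (r, c) in path:  # local reaction: the planned route is now blocked
                path = astar(grid, pos, goal)
                if path is None:
                    break
        pos, path = path[1], path[1:]
        travelled.append(pos)
        step += 1
    return travelled if pos == goal else None

open_floor = [".....", ".....", "....."]
route = navigate(open_floor, (1, 0), (1, 4), appearing={1: (1, 2)})
print(route)
```

Replanning from scratch is the simplest possible local reaction; real controllers typically adjust velocity and steering continuously rather than recomputing the whole route.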

Algorithms and Advanced Technologies

Research demonstrates that cutting-edge AMRs rely on a mix of AI, computer vision, and optimization algorithms:

SLAM (Simultaneous Localization and Mapping):

Robots employ a method known as Simultaneous Localization and Mapping (SLAM), in which they create maps of their surroundings while tracking their own location within them.

This dual mechanism lets them simultaneously grasp where they are and what is around them. As a consequence, AMRs can adapt to unfamiliar places or places that change often, such as warehouses with shifting merchandise or hospitals with moving equipment, without requiring pre-programmed routes.
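A full SLAM system is too large for a short example, but the mapping half can be sketched on its own. The snippet below updates an occupancy grid from a single range beam using log-odds, assuming the robot's pose is already known (in real SLAM the pose is estimated at the same time). The increment values, cell size, and function names are illustrative assumptions.

```python
import math

L_OCC, L_FREE = 0.85, -0.4  # assumed log-odds increments for "hit" and "passed through"

def cells_along_beam(pose, bearing, rng, cell_size):
    """Grid cells crossed by a beam, sampled at half-cell spacing, in order from the robot."""
    x, y, theta = pose
    angle = theta + bearing
    n = max(1, math.ceil(rng / (0.5 * cell_size)))
    cells = []
    for i in range(n + 1):
        d = rng * i / n
        cell = (math.floor((x + d * math.cos(angle)) / cell_size),
                math.floor((y + d * math.sin(angle)) / cell_size))
        if not cells or cells[-1] != cell:
            cells.append(cell)
    return cells

def integrate_beam(log_odds, pose, bearing, rng, cell_size=1.0):
    """Lower the occupancy belief along the beam and raise it at the end point."""
    *free, hit = cells_along_beam(pose, bearing, rng, cell_size)
    for cell in free:
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[hit] = log_odds.get(hit, 0.0) + L_OCC

def probability(l):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))

grid = {}  # sparse map: missing cells are unknown (log-odds 0, probability 0.5)
for _ in range(2):  # two identical scans reinforce the same belief
    integrate_beam(grid, pose=(0.5, 0.5, 0.0), bearing=0.0, rng=3.0)
print({cell: round(probability(l), 2) for cell, l in sorted(grid.items())})
```

Because evidence accumulates additively, a pallet that is moved away gradually fades from the map as later beams pass through its old cell, which is one way a map can keep up with a changing warehouse.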

Machine Learning & Deep Reinforcement Learning:

Machine Learning (ML) and Deep Reinforcement Learning (DRL) enable AMRs to move beyond predefined rules and static programming. Instead of merely reacting to obstacles, robots can analyze patterns from past experience, such as how people move in a crowded warehouse or how forklifts operate on particular routes. This allows them to make better decisions in the future.

DRL, for example, enables robots to ‘learn by trial and error’ in simulated or real-world situations, gradually refining navigation tactics. Over time, this means that AMRs anticipate obstacles, take safer, quicker paths, and adjust smoothly to uncertain, dynamic surroundings.
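The trial-and-error loop can be illustrated with tabular Q-learning, a much simpler relative of DRL (DRL replaces the lookup table with a neural network). This is a toy sketch under stated assumptions: a one-dimensional corridor, a reward of 10 at the goal and -1 per step, and hyperparameters picked for illustration.

```python
import random

ACTIONS = (-1, +1)  # step left or step right along the corridor

def train_corridor(length=6, episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values for reaching the right-hand end of a 1-D corridor."""
    rng = random.Random(seed)
    goal = length - 1
    q = {(s, a): 0.0 for s in range(length) for a in ACTIONS}
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:  # explore
                a = rng.choice(ACTIONS)
            else:                       # exploit the current estimate
                a = max(ACTIONS, key=lambda act: q[(s, act)])
            s2 = min(max(s + a, 0), goal)  # walls at both ends
            reward = 10.0 if s2 == goal else -1.0
            best_next = 0.0 if s2 == goal else max(q[(s2, b)] for b in ACTIONS)
            # Q-learning update: move the estimate toward reward + discounted future value.
            q[(s, a)] += alpha * (reward + gamma * best_next - q[(s, a)])
            s = s2
            if s == goal:
                break
    return q

def greedy_policy(q, length=6):
    """Best known action for every non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(length - 1)]

q_table = train_corridor()
policy = greedy_policy(q_table)
print(policy)
```

Early episodes wander, and the per-step penalty teaches the agent that wandering is costly; after training, the greedy action in every cell is to head toward the goal.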

Advanced path-planning methods like PRMs and RRTs:

Probabilistic Roadmaps (PRMs) and Rapidly-exploring Random Trees (RRTs) are sampling-based algorithms that help AMRs find effective paths across complicated, cluttered spaces. PRMs work by randomly sampling points in an area and linking them into a roadmap of possible paths, which the robot can then search for a route that avoids obstacles. This strategy is useful in static settings where the robot must pre-plan paths through large, complex spaces.
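A minimal PRM can be sketched in a few dozen lines. The version below assumes a unit-square workspace with circular obstacles given as `(cx, cy, r)` tuples, and uses Dijkstra's algorithm to query the roadmap; the sample count, connection radius, and function names are illustrative choices.

```python
import heapq
import math
import random

def in_collision(p, obstacles):
    return any(math.dist(p, (cx, cy)) <= r for cx, cy, r in obstacles)

def segment_clear(a, b, obstacles, step=0.02):
    """Approximate edge check: sample points along a-b and test each one."""
    n = max(1, int(math.dist(a, b) / step))
    return not any(
        in_collision((a[0] + (b[0] - a[0]) * i / n, a[1] + (b[1] - a[1]) * i / n), obstacles)
        for i in range(n + 1))

def build_prm(n_samples, radius, obstacles, extra_nodes=(), seed=0):
    """Sample collision-free points and link every pair that is close and mutually visible."""
    rng = random.Random(seed)
    nodes = list(extra_nodes)  # start and goal, assumed to be collision-free
    while len(nodes) < n_samples + len(extra_nodes):
        p = (rng.random(), rng.random())
        if not in_collision(p, obstacles):
            nodes.append(p)
    edges = {i: [] for i in range(len(nodes))}
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            d = math.dist(nodes[i], nodes[j])
            if d <= radius and segment_clear(nodes[i], nodes[j], obstacles):
                edges[i].append((j, d))
                edges[j].append((i, d))
    return nodes, edges

def shortest_path(nodes, edges, src, dst):
    """Dijkstra over the roadmap; returns a list of points or None."""
    dist, prev, heap = {src: 0.0}, {}, [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist[u]:
            continue
        for v, w in edges[u]:
            if d + w < dist.get(v, math.inf):
                dist[v], prev[v] = d + w, u
                heapq.heappush(heap, (d + w, v))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return [nodes[i] for i in reversed(path)]

obstacles = [(0.5, 0.5, 0.2)]  # one circular obstacle in the middle of the floor
start, goal = (0.1, 0.1), (0.9, 0.9)
nodes, edges = build_prm(200, 0.25, obstacles, extra_nodes=(start, goal))
prm_path = shortest_path(nodes, edges, 0, 1)  # indices 0 and 1 are start and goal
print(prm_path)
```

The roadmap is built once and can be queried many times with different start and goal points, which is why this approach suits environments that stay the same between queries.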

RRTs, on the other hand, are well suited to dynamic or unfamiliar settings. They quickly grow a ‘tree’ of candidate paths from the robot’s starting position, exploring outward until a branch reaches the destination. When PRMs and RRTs work together, they give AMRs adaptable, scalable navigation techniques that allow them to function in confined, obstacle-rich areas such as warehouses and hospitals, as well as urban areas and factory floors.
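The tree-growing idea can be sketched as a basic RRT in the same kind of unit-square workspace with circular obstacles. The step size, goal bias, and tolerance below are assumed values, and for brevity only each new node is collision-checked (not the whole edge), which is reasonable only when the step is small relative to the obstacles.

```python
import math
import random

def rrt(start, goal, obstacles, step=0.05, goal_tol=0.05,
        max_iters=3000, goal_bias=0.1, seed=0):
    """Grow a tree from start toward random targets; return a path once a node nears the goal."""
    rng = random.Random(seed)
    nodes, parent = [start], {0: None}
    for _ in range(max_iters):
        # Occasionally aim straight at the goal, otherwise at a random point.
        target = goal if rng.random() < goal_bias else (rng.random(), rng.random())
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], target))
        near = nodes[i_near]
        d = math.dist(near, target)
        if d == 0.0:
            continue
        scale = min(step, d) / d  # move at most one step toward the target
        new = (near[0] + scale * (target[0] - near[0]),
               near[1] + scale * (target[1] - near[1]))
        if any(math.dist(new, (cx, cy)) <= r for cx, cy, r in obstacles):
            continue  # node-only collision check (simplification)
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:  # walk back up the tree to the root
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None

rrt_obstacles = [(0.5, 0.5, 0.2)]
rrt_path = rrt((0.1, 0.1), (0.9, 0.9), rrt_obstacles)
print(len(rrt_path) if rrt_path else None)
```

Unlike a roadmap, the tree is grown for one query at a time, so it needs no precomputed structure. That is why RRT-style planners are often used when the map is new or has just changed.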

Edge Computing:

AMRs can now analyze data on or close to the robot instead of relying on remote cloud servers thanks to edge computing. As a result, delays are reduced, enabling snap decisions for safety, obstacle avoidance, and routing in dynamic environments.

Together, these technologies improve autonomy, safety, and efficiency in uncertain real-world circumstances.

Data Analysis and Processing

Navigation is about interpreting the data, not just using sensors. Contemporary AMRs examine vast amounts of data to:

Recognize objects and predict motion:

AMRs employ computer vision and sensor data to recognize things in their environment, such as walls, pallets, forklifts, and humans. Beyond identifying them, prediction algorithms forecast how these objects will move. For example, a robot can predict whether a worker will go straight or turn, allowing it to plan ahead of time rather than reacting at the last minute.

Fuse multiple data streams for reliable mapping:

Robots rarely rely on only one sensor. AMRs obtain a more precise and dependable picture of their surroundings by integrating LiDAR scans, camera feeds, and inertial measurements. This “sensor fusion” helps to overcome the limitations of individual sensors: for example, cameras struggle in low light, while LiDAR can miss transparent surfaces like glass. Together, the sensors provide all-encompassing situational awareness.
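One standard way to fuse such streams is a Kalman filter. The sketch below is a one-dimensional version under simplifying assumptions: the robot moves along a corridor, inertial/odometry data drives the prediction, and LiDAR and camera each supply a noisy position fix. The class and function names and all variance values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    position: float  # estimated position along the corridor, in metres
    variance: float  # uncertainty of that estimate, in metres squared

def predict(est: Estimate, motion: float, motion_var: float) -> Estimate:
    """Dead-reckoning step: shift by the measured motion; uncertainty grows."""
    return Estimate(est.position + motion, est.variance + motion_var)

def update(est: Estimate, measured: float, meas_var: float) -> Estimate:
    """Correction step: blend in a position fix, weighted by relative confidence."""
    gain = est.variance / (est.variance + meas_var)  # Kalman gain, between 0 and 1
    return Estimate(est.position + gain * (measured - est.position),
                    (1.0 - gain) * est.variance)

est = Estimate(position=0.0, variance=1.0)
est = predict(est, motion=1.0, motion_var=0.5)   # inertial data: moved about 1 m
est = update(est, measured=1.2, meas_var=0.5)    # LiDAR fix: fairly precise
est = update(est, measured=1.1, meas_var=1.5)    # camera fix: noisier, e.g. in low light
print(est)
```

Each correction shrinks the variance, and a noisier sensor simply gets a smaller gain, so a camera degraded by poor lighting still contributes without dominating the estimate.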

Use reinforcement learning to optimize path planning and obstacle avoidance:

Instead of adhering to predefined rules, AMRs can discover the optimum navigation tactics over time. Reinforcement learning allows robots to “experiment” with multiple courses in simulations or actual situations, gaining feedback on their success or failure. They slowly improve their selections, learning how to select routes that are both faster and safer, even in complicated or uncertain environments.

Automation and SLAM: The Backbone of AMRs

SLAM is the game-changing technology that powers the current wave of AMRs. SLAM enables robots to function in areas where predefined maps are unavailable or where conditions change often. Combined with machine learning and edge computing, it enables fast, precise, real-time decision-making.

SLAM allows AMRs to adapt to layout changes, communicate with other robots, and promote Industry 4.0 objectives by seamlessly integrating into automated systems.

The Future of AMRs

AI-powered cognition, 5G-enabled connectivity, and closer collaboration between people and robots will propel AMR progress further.

AMRs, together with predictive intelligence, are reshaping the future of labor and automation by enabling hyper-optimized fleet management and adoption across industries.

The development of AMRs exemplifies the ideal balance of modern algorithms, automation, and real-world adaptation. From SLAM to obstacle avoidance, each advancement has moved us closer to completely autonomous systems capable of transforming sectors throughout the world.